Greetings from above,

It’s Robert here - and honestly, this new research hit me harder than a zoning committee rejection letter.

Back in my urban planning days, we had an archive room. 50,000+ blueprints, permits, and environmental reports.

If you filed something wrong, it didn't just get lost… it ceased to exist.

You could spend three weeks looking for a sewage map that was right next to a park proposal because they both had the word "underground" in the title.

I thought AI fixed this. I thought, "Great, throw it all in a Vector Database, and the AI finds it."

I was wrong.

Stanford just dropped a bombshell: RAG (Retrieval-Augmented Generation) breaks at scale. It’s called "Semantic Collapse." Once you hit about 10,000 documents, your fancy AI starts treating valuable data like random noise.

It’s the digital equivalent of my old archive room - everything is everywhere, and nothing can be found.

Today's workflow system will show you:

How to stop your AI from getting "dementia" as it scales

Why "flat" vector search is a dead end for big business

The exact chain that builds a "Hierarchical Brain" instead of a messy bucket

Let's build your competitive advantage!

🎯 THE ANTI-COLLAPSE RAG ARCHITECT

This workflow solves the "Semantic Collapse" problem exposed by Stanford. Most businesses are dumping data into flat vector stores.

When they scale past 10k docs, their precision drops by 87%. They think the AI is hallucinating; actually, it’s just blind.
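Before trusting any precision number, it helps to measure your own. Here is a minimal sketch of precision@k, assuming you have a handful of test queries where a human has marked which documents are relevant. The document IDs and the `runs` list are made up for illustration.

```python
def precision_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the top-k retrieved documents that are actually relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = retrieved_ids[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant_ids)
    return hits / k


def mean_precision_at_k(runs, k):
    """Average precision@k over (retrieved_ids, relevant_ids) pairs."""
    scores = [precision_at_k(retrieved, relevant, k) for retrieved, relevant in runs]
    return sum(scores) / len(scores)


# Hypothetical labeled queries: what the retriever returned vs. what a human marked relevant.
runs = [
    (["sewage_map", "park_proposal", "permit_17"], {"sewage_map", "permit_17"}),
    (["park_proposal", "zoning_memo", "sewage_map"], {"sewage_map"}),
]
score = mean_precision_at_k(runs, k=3)
print(f"mean precision@3 = {score:.2f}")
```

Run the same queries at 1k, 10k, and 50k documents and you can see whether your own precision actually drops, and by how much.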

This workflow creates:

A "Collapse Risk" Audit to see if your current system is doomed

A Hierarchical Indexing Strategy (The Encyclopedia Method)

A Knowledge Graph Schema (The "Nuclear Option" for precision)

🔗 WORKFLOW OVERVIEW

Here's the complete system we're building today:

Step 1: The RAG Vulnerability Auditor → Risk Assessment Report

Step 2: The Hierarchy Architect → Structured Tree Taxonomy

Step 3: The Graph-RAG Schema Builder → Node-Edge Logic Definition

Each prompt builds on the previous one. We diagnose the noise, structure the data, and then connect the dots.
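If you want to run the chain from a script instead of copy-pasting, the handoff can be sketched like this. Everything here is an assumption for illustration: `fake_llm` stands in for whatever model call you use, and the one-line templates and placeholder names are shortened stand-ins for the full prompts in this issue.

```python
def fill_template(template, values):
    """Replace each [PLACEHOLDER] in a prompt template with the supplied text."""
    filled = template
    for placeholder, text in values.items():
        filled = filled.replace(f"[{placeholder}]", text)
    return filled


def run_chain(steps, first_inputs, call_llm):
    """Run prompts in order, making each output available to later templates."""
    values = dict(first_inputs)
    outputs = []
    for template, output_slot in steps:
        prompt = fill_template(template, values)
        result = call_llm(prompt)
        outputs.append(result)
        values[output_slot] = result  # later steps can reference this output
    return outputs


def fake_llm(prompt):
    """Stand-in for a real model call; it only echoes the prompt's first line."""
    return "OUTPUT FOR: " + prompt.splitlines()[0]


# Hypothetical one-line versions of the three prompts.
steps = [
    ("Audit my RAG system. Domain: [DOMAIN]. Volume: [VOLUME].", "AUDIT"),
    ("Design a hierarchy for [DOMAIN]. Typical queries: [QUERIES]", "HIERARCHY"),
    ("Design a graph schema for [DOMAIN]. Hierarchy: [HIERARCHY]", "SCHEMA"),
]
first_inputs = {
    "DOMAIN": "Internal HR Policies",
    "VOLUME": "50,000",
    "QUERIES": "How much parental leave do I get?",
}
outputs = run_chain(steps, first_inputs, fake_llm)
for step_number, text in enumerate(outputs, start=1):
    print(step_number, text)
```

The key design choice is that Step 3 receives the Step 2 output as an input, which is the same handoff you do by hand when you paste the hierarchy summary into Prompt #3.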

⚙️ PROMPT #1: THE RAG VULNERABILITY AUDITOR

💡 What this does: It has the AI act as a Senior Data Scientist. It analyzes your data volume, domain, and embedding model to estimate your "Semantic Collapse" probability and the point where your system is likely to break.

<context>

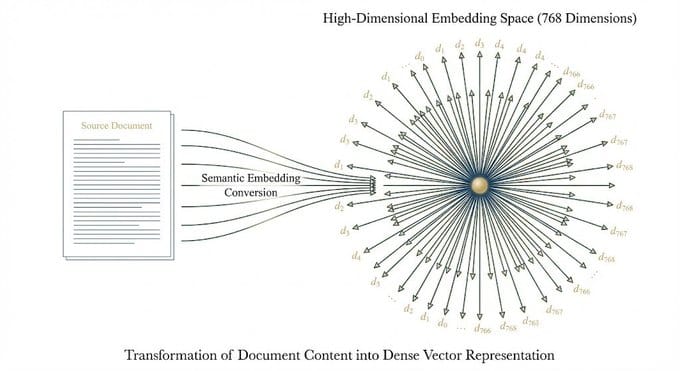

You are a Senior Data Scientist specializing in High-Dimensional Vector Spaces and Information Retrieval, specifically focusing on the "Curse of Dimensionality" and "Semantic Collapse" in RAG systems. You understand that past ~10,000 documents, standard dense embeddings (768d-1536d) lose precision as data points become equidistant on the hypersphere surface.

</context>

<role>

Adopt the role of a RAG Systems Auditor. Your goal is to analyze the user's dataset characteristics and predict "Semantic Collapse" risks. You must identify where their retrieval precision is likely to plummet and explain why standard vector search will fail them.

</role>

<response_guidelines>

- BE BRUTAL. If their math implies failure, tell them.

- Focus on the "Curse of Dimensionality" mechanics.

- Calculate the "Noise Threshold"—the point where their specific data type will likely become indistinguishable from random noise.

- Provide a "Collapse Probability Score" (0-100%).

- Avoid generic advice like "clean your data." Focus on structural failure.

</response_guidelines>

<task_criteria>

Analyze the user's inputs regarding their document volume, average document length, domain specificity, and current embedding model. Generate a "Semantic Collapse Risk Report" that details:

1. The estimated "Precision Cliff" (at how many docs retrieval fails).

2. The "Hallucination Vector" (why adding more context will actually confuse their specific model).

3. A technical breakdown of why their current "flat" index approach is mathematically flawed.

</task_criteria>

<information_about_me>

- My Data Volume: [INSERT TOTAL NUMBER OF DOCUMENTS/CHUNKS]

- My Domain: [INSERT INDUSTRY OR TOPIC, E.G., LEGAL CASE LAW, MEDICAL RECORDS]

- My Embedding Model: [INSERT MODEL IF KNOWN, E.G., OPENAI TEXT-EMBEDDING-3, OR "STANDARD"]

</information_about_me>

<response_format>

# 🚨 SEMANTIC COLLAPSE RISK REPORT

## 📊 VITAL STATISTICS

- **Current Volume:** [Value]

- **Projected Precision Drop:** [Percentage estimate based on Stanford benchmarks]

- **Collapse Probability Score:** [0-100%]

## 📉 THE PRECISION CLIFF

[Explanation of where the system breaks based on volume]

## 🧠 THE HALLUCINATION TRAP

[Specific analysis of why this domain causes high-dimensional overlapping]

## 🛠 RECOMMENDED INTERVENTION

[Brief preview of moving to Hierarchical or Graph structures]

</response_format>

Input needed:

Total number of documents (approximate)

Your Industry (e.g., "Internal HR Policies" or "Customer Support Logs")

Current Model (or just say "Standard")

Output you'll get:

A brutal audit of your system's math, showing you exactly where and why it will fail to retrieve the right information.
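The "equidistant points" idea behind this prompt can be seen in a toy experiment. This is a minimal sketch, assuming uniformly random points rather than real embeddings (real embeddings have structure, so this shows the mechanism, not a 10,000-document threshold). The `relative_contrast` helper is a name made up for this example.

```python
import math
import random


def relative_contrast(dim, n_points, rng):
    """(farthest - nearest) / nearest distance from one random query to random points."""
    query = [rng.random() for _ in range(dim)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        distances.append(math.dist(query, point))
    nearest, farthest = min(distances), max(distances)
    return (farthest - nearest) / nearest


rng = random.Random(0)  # fixed seed so the run is repeatable
contrasts = {dim: relative_contrast(dim, 500, rng) for dim in (2, 32, 512)}
for dim, contrast in contrasts.items():
    print(f"dim={dim:4d}  relative contrast={contrast:.2f}")
```

As the dimension grows, the gap between the nearest and farthest neighbor shrinks relative to the nearest distance, which is why "closest match" carries less and less signal for unstructured data.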

⚙️ PROMPT #2: THE HIERARCHY ARCHITECT

💡 What this does: This prompt takes your messy, flat data and architects a strict tree structure (Encyclopedia → Chapter → Section). It drastically reduces the search space, preventing the AI from looking at 50,000 docs at once.

#CONTEXT:

You are an Information Architect and Taxonomy Expert who solves "Semantic Collapse" by implementing Hierarchical Retrieval systems. You reject "flat" vector spaces in favor of structured, tree-based navigation that mimics how humans organize libraries (Encyclopedia -> Chapter -> Section -> Paragraph).

#ROLE:

Adopt the role of the Hierarchy Architect. Your task is to design a 3-Level Retrieval Taxonomy for the user's specific data domain. This structure will serve as the "routing layer" for a RAG system, ensuring queries are narrowed down to a small, relevant subset of documents before vector search is ever applied.

#RESPONSE GUIDELINES:

- Create distinct, mutually exclusive categories to prevent overlap.

- **Level 1 (Root):** Broadest categories (The "Encyclopedia").

- **Level 2 (Branch):** Specific sub-topics (The "Chapters").

- **Level 3 (Leaf):** The actual document types or chunks (The "Paragraphs").

- Explain the "Routing Logic": How a query determines which branch to go down.

- Focus on reducing the "Search Space" from All Docs -> <200 Docs.

#TASK CRITERIA:

Take the "Domain" and "Data Type" from the user. Design a hierarchical tree.

1. Define 3-5 Root Categories.

2. Break each Root into 3-5 Sub-Categories.

3. Write a "Routing Prompt" example for the AI to choose the right path.

#INFORMATION ABOUT ME:

- My Domain: [INSERT DOMAIN FROM STEP 1]

- Typical User Queries: [INSERT 2-3 QUESTIONS USERS ASK]

#RESPONSE FORMAT:

## 🌳 THE HIERARCHICAL INDEX STRATEGY

### LEVEL 1: ROOT NODES (The Gatekeepers)

[List of Top-Level Categories]

### LEVEL 2: BRANCH NODES (The Filters)

[Breakdown of Sub-Categories]

### LEVEL 3: LEAF NODES (The "Paragraphs")

[Document types or chunks under each Sub-Category]

### 🧭 ROUTING LOGIC

[How the system decides where to look]

### 📉 SEARCH SPACE REDUCTION

[Math showing how we reduced the noise]

Input needed:

Domain (from Step 1)

2-3 typical questions you ask the AI

Output you'll get:

A structured "Tree" for your data. Instead of searching 50,000 docs at once, the AI picks a category, then a sub-category, and lands in a small bucket (the target is under 200 docs) where vector search actually works.
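Here is a rough sketch of the routing layer plus the search-space math. It assumes documents split evenly across buckets, and it uses simple keyword overlap as a stand-in for the "Routing Prompt" (in practice an LLM or classifier would pick the branch). The taxonomy, keywords, and document names are hypothetical.

```python
import math


def leaf_bucket_size(total_docs, fanouts):
    """Average docs per leaf if documents split evenly at every level."""
    return total_docs / math.prod(fanouts)


def route(query, tree):
    """Pick a root, then a branch, by counting keyword overlaps with the query."""
    words = set(query.lower().split())

    def best(options):
        return max(options, key=lambda name: len(words & options[name]["keywords"]))

    root = best(tree)
    branch = best(tree[root]["branches"])
    return root, branch, tree[root]["branches"][branch]["docs"]


# Hypothetical two-level taxonomy for an HR knowledge base.
tree = {
    "Benefits": {
        "keywords": {"leave", "insurance", "vacation", "parental"},
        "branches": {
            "Leave": {"keywords": {"leave", "vacation", "parental"},
                      "docs": ["leave_policy_2024"]},
            "Insurance": {"keywords": {"insurance", "dental"},
                          "docs": ["dental_plan"]},
        },
    },
    "Payroll": {
        "keywords": {"salary", "payslip", "tax"},
        "branches": {
            "Tax": {"keywords": {"tax", "withholding"}, "docs": ["tax_forms"]},
            "Salary": {"keywords": {"salary", "payslip"}, "docs": ["pay_schedule"]},
        },
    },
}

root, branch, docs = route("how much parental leave do i get", tree)
print(root, branch, docs)
print(leaf_bucket_size(50_000, [5, 5]))      # two levels of 5 branches each
print(leaf_bucket_size(50_000, [5, 5, 10]))  # add a third level of 10 leaves
```

With an even split, two levels of 5 leave about 2,000 docs per bucket out of 50,000. Getting down to roughly 200 takes around 250 leaves in total, for example a third level of 10. Vector search then runs only over the `docs` list of the chosen bucket.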

⚙️ PROMPT #3: THE GRAPH-RAG SCHEMA BUILDER

💡 What this does: This is the "Nuclear Option". If trees aren't enough, we use Graph Retrieval.

This prompt defines the Nodes (Entities) and Edges (Relationships) needed to build a Knowledge Graph, forcing the AI to traverse explicit connections rather than guessing with math.

#CONTEXT:

You are a Knowledge Graph Engineer specializing in Graph-RAG (Graph-based Retrieval Augmented Generation). You understand that for complex, interconnected data, vector similarity is insufficient. You build systems where documents are "Nodes" connected by explicit "Edges" (relationships).

#ROLE:

Adopt the role of a Graph Schema Architect. Your goal is to define the "Ontology" for the user's RAG system. You must determine what constitutes an "Entity" in their data and what constitutes a "Relationship." This schema allows the AI to "traverse" from one concept to another logically, rather than probabilistically.

#RESPONSE GUIDELINES:

- Define **Node Types** (e.g., "Policy," "Person," "Department").

- Define **Edge Types** (e.g., "APPLIES_TO," "REPLACES," "AUTHORED_BY").

- Explain a "Traversal Path": How a query would hop from Node A to Node B to find the answer.

- This creates a deterministic path to the answer, eliminating hallucination caused by vector noise.

#TASK CRITERIA:

Based on the Hierarchy created in Step 2:

1. Identify the core Entities (Nouns).

2. Identify the core Relationships (Verbs).

3. Design a sample Graph Schema.

4. Simulate a "Graph Traversal" for a specific user query.

#INFORMATION ABOUT ME:

- My Domain: [INSERT DOMAIN]

- Hierarchy Structure: [PASTE SUMMARY OF STEP 2]

#RESPONSE FORMAT:

## 🕸️ KNOWLEDGE GRAPH SCHEMA

### 🟢 NODE TYPES (The "Things")

- **[Node Name]:** [Description]

- **[Node Name]:** [Description]

### 🔗 EDGE TYPES (The "Connections")

- **[Edge Name]:** [Description of relationship]

### 🛣️ TRAVERSAL SIMULATION

**Query:** "[Insert Query]"

**Path:** [Node A] --(Edge)--> [Node B] --(Edge)--> [Answer]

Input needed:

Your Domain

The Hierarchy summary from Step 2

Output you'll get:

A blueprint for a "Graph Database." This tells you exactly how to link your data so the AI can "think" its way to an answer by following connections, rather than guessing based on word similarity.
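To make "following connections" concrete, here is a minimal sketch of a traversal over typed edges, using plain Python instead of a real graph database. The node names and the `MEMBER_OF` edge type are hypothetical. `REPLACES`, `APPLIES_TO`, and `AUTHORED_BY` come from the prompt's own examples.

```python
from collections import deque

# Hypothetical typed edges: (source node, edge type, target node).
EDGES = [
    ("Remote Work Policy v2", "REPLACES", "Remote Work Policy v1"),
    ("Remote Work Policy v2", "APPLIES_TO", "Engineering"),
    ("Remote Work Policy v2", "AUTHORED_BY", "Dana Lee"),
    ("Dana Lee", "MEMBER_OF", "HR"),
]


def build_graph(edges):
    """Index edges by source node for fast outgoing-edge lookups."""
    graph = {}
    for source, edge_type, target in edges:
        graph.setdefault(source, []).append((edge_type, target))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search returning the shortest hop list from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for edge_type, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                queue.append((target, path + [(node, edge_type, target)]))
    return None  # no chain of edges connects the two nodes


graph = build_graph(EDGES)
path = find_path(graph, "Remote Work Policy v2", "HR")
for source, edge_type, target in path:
    print(f"[{source}] --({edge_type})--> [{target}]")
```

A question like "Which department wrote the current remote work policy?" becomes two hops: policy to author, author to department. Edges here are one-directional, so a lookup in the reverse direction returns `None` unless you add inverse edges.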

📋 SUMMARY 📋

Step 1 diagnosed that your flat vector database is mathematically doomed to fail.

Step 2 built a "Tree" to reduce the search space from 50,000 docs to ~200 relevant ones.

Step 3 designed a "Graph" to create explicit, unbreakable links between your data points.

📦 WRAP UP 📦

What you learned today:

Semantic Collapse is real – Past 10k docs, AI search becomes a coin flip.

Hierarchy saves accuracy – Breaking data into "Encyclopedia -> Chapter" structures restores precision.

Graphs beat Vectors – For complex data, explicit connections (Graphs) beat probabilistic similarity (Vectors).

This workflow transforms your RAG system from a lottery ticket into a library.

No more hallucinations. No more "I couldn't find that."

You now have a system that scales without getting stupid.

And as always, thanks for being part of my lovely community,

Keep building systems,

🔑 Alex from God of Prompt

P.S. What automation is currently breaking your brain? Reply and let me know - I might build a workflow for it next week.