
Greetings from above,

Trying to use ChatGPT for everything is like trying to eat soup with a fork.

You can do it, but you'll starve before you finish.

ROBERT'S STORY: I remember trying to build my first "autonomous city planner" agent back in early 2024. I was lazy. I fed standard ChatGPT a 50-page zoning code, a satellite image, and asked for a 3D model.

It hallucinated a parking lot in the middle of a lake and told me the "vibes" were off. I realized asking a text generator to do spatial reasoning is like asking a fish to climb a tree.

So I split the job: a vision-language model (VLM) for the maps, a large reasoning model (LRM) for the logic, and a small language model (SLM) for the specific regulations. Suddenly? The system worked.

Today's workflow system will show you:

How to stop overpaying for "dumb" AI tasks

The 8-model framework for matching each task to the right architecture

The exact chain to build a "Multi-Architecture" Agent that hallucinates far less

Let's build your competitive advantage!

🎯 THE MULTI-MODEL ORCHESTRATOR SYSTEM

Most "AI Agents" fail because people force a Chatbot (GPT) to be a Mathematician, Eyes, and Robot all at once.

This workflow implements the HLM (Hierarchical Language Model) approach. We don't just ask one model to do it all.

We break your business task into "Cognitive Components" and assign the exact right brain architecture (VLM, LRM, SLM, etc.) to handle it.

This workflow creates:

Cost Efficiency: Routes simple tasks to cheaper models (MoE/SLM)

Higher Accuracy: Uses Reasoning Models (LRM) only when logic is needed

Visual Intelligence: Activates Vision Models (VLM) for image tasks
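To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The prices and token volumes are made-up placeholders, not real vendor pricing, and the tier names (`small`, `vision`, `reasoning`) are hypothetical labels. Swap in your own numbers before drawing any conclusions.

```python
def blended_cost(tokens_by_tier: dict[str, int], price_per_million: dict[str, float]) -> float:
    """Total cost in dollars when each tier's tokens are billed at that tier's price."""
    return sum(
        tokens / 1_000_000 * price_per_million[tier]
        for tier, tokens in tokens_by_tier.items()
    )


# PLACEHOLDER prices in dollars per million tokens. These are NOT real prices.
PRICES = {"small": 0.5, "vision": 5.0, "reasoning": 20.0}

# PLACEHOLDER monthly workload: most tokens are simple text, a few need eyes or logic.
WORKLOAD = {"small": 8_000_000, "vision": 1_000_000, "reasoning": 1_000_000}

# Routed: every tier pays its own price.
routed = blended_cost(WORKLOAD, PRICES)

# Single-model: every token is billed at the most expensive tier's price.
single = sum(WORKLOAD.values()) / 1_000_000 * PRICES["reasoning"]

print(f"routed: ${routed:.2f}, single model: ${single:.2f}")
```

The point is the shape of the math, not the figures: the savings come from the share of tokens you can move off the expensive tier.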

🔗 WORKFLOW OVERVIEW

Here's the complete system we're building today:

Step 1: The Task Autopsy → Cognitive Breakdown (Splits the job into parts)

Step 2: The Architecture Matchmaker → Model Assignments (Assigns the specialist)

Step 3: The HLM Manager Prompt → The Master Script (The prompt that runs the agents)

Each prompt builds on the previous one. No gaps. No guesswork.
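If you would rather run the chain from a script than paste by hand, the three steps above can be sketched like this. Everything here is an assumption for illustration: `call_model` is a hypothetical stand-in for whatever API client you use, and the three `PROMPT_*` strings are short placeholders for the full prompts in this issue.

```python
from typing import Callable

# Hypothetical one-line placeholders for the three full prompts in this issue.
PROMPT_1 = "Break this workflow into cognitive steps:\n{input}"
PROMPT_2 = "Assign an architecture to each step in this table:\n{input}"
PROMPT_3 = "Write a Manager system prompt for this architecture plan:\n{input}"


def run_chain(goal: str, call_model: Callable[[str], str]) -> dict[str, str]:
    """Run the three prompts in order, feeding each output into the next prompt."""
    breakdown = call_model(PROMPT_1.format(input=goal))
    assignments = call_model(PROMPT_2.format(input=breakdown))
    manager_prompt = call_model(PROMPT_3.format(input=assignments))
    return {
        "breakdown": breakdown,
        "assignments": assignments,
        "manager_prompt": manager_prompt,
    }


def fake_model(prompt: str) -> str:
    """Stand-in for a real API call; echoes the prompt's first line so you can trace the chain."""
    return f"<output of: {prompt.splitlines()[0]}>"


result = run_chain("Automate my invoice processing", fake_model)
for name, text in result.items():
    print(name, "->", text)
```

Replace `fake_model` with a function that calls your provider of choice; the chain itself does not care which model sits behind it.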

⚙️ PROMPT #1: THE TASK AUTOPSY

💡 What this prompt does: It takes your complex goal (e.g., "Automate my invoice processing") and dissects it. It identifies which parts require eyes (Vision), which require complex logic (Reasoning), and which are just simple text tasks.

This prevents you from wasting expensive compute on simple tasks.

#CONTEXT:

You are a Senior AI Systems Architect specializing in "Cognitive Task Decomposition." You understand that large business goals often fail in AI because they are treated as one massive prompt. Your expertise lies in breaking down complex workflows into isolated, atomic "cognitive steps" (e.g., Visual Perception, Logical Reasoning, Creative Generation, Action Execution).

#ROLE:

Adopt the role of an expert AI Systems Architect. Your goal is to analyze the user's workflow and dissect it into specific, isolated sub-tasks that can be assigned to specialized AI models.

#RESPONSE GUIDELINES:

1. **Analyze the Input:** Read the user's desired workflow thoroughly.

2. **Deconstruct:** Break the workflow into 3-10 atomic steps.

3. **Identify Cognitive Load:** For EACH step, identify the primary "brain function" needed:

* **Visual:** Needs to see/read an image/UI.

* **Reasoning:** Needs complex logic, math, or multi-step deduction.

* **Creative/Conversational:** Needs fluent text generation.

* **Action:** Needs to click, type, or use an API.

* **Niche/Repetitive:** A specific, narrow rule-set (perfect for small models).

4. **Format:** Output a structured table.

#TASK CRITERIA:

- Do not solve the task. Only break it down.

- Be ruthless in isolating "Action" from "Reasoning."

- If a step involves looking at a document/screen, flag it as VISUAL.

#INFORMATION ABOUT ME:

- My Workflow/Goal: [INSERT YOUR COMPLEX TASK HERE - e.g., "I want to automate reading PDF invoices, checking them against a spreadsheet, and emailing the client if there's an error."]

#RESPONSE FORMAT:

Provide a Markdown Table with columns: Step ID, Description, Primary Cognitive Type (Visual/Reasoning/Creative/Action/Niche).

Input needed:

Your complex task (e.g., "Scrape my competitor's pricing from screenshots and update my database.")

Output you'll get:

A clear breakdown identifying that "Scraping screenshots" is a Visual task, while "Comparing prices" is a Reasoning task.
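If you plan to feed the breakdown into code instead of pasting it into the next prompt, a small parser helps. This is a minimal sketch that assumes the model returns exactly the three-column Markdown table requested above; the `Step` class, the `parse_breakdown` function, and the sample table are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    step_id: str
    description: str
    cognitive_type: str


def parse_breakdown(table: str) -> list[Step]:
    """Parse a three-column Markdown table into Step records."""
    steps = []
    for line in table.strip().splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # ignore any prose the model adds around the table
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != 3:
            continue  # not a row in the expected shape
        # Skip the header row and the |---|---|---| separator row.
        if cells[0].lower() == "step id" or set(cells[0]) <= set("-: "):
            continue
        steps.append(Step(cells[0], cells[1], cells[2].upper()))
    return steps


# Hypothetical model output for the screenshot-pricing example.
SAMPLE = """
| Step ID | Description | Primary Cognitive Type |
|---|---|---|
| 1 | Read prices from competitor screenshots | Visual |
| 2 | Compare prices against my database | Reasoning |
| 3 | Write the updated prices to the database | Action |
"""

for step in parse_breakdown(SAMPLE):
    print(step.step_id, step.cognitive_type, step.description)
```

Real model output is messier than this sample, so treat any row the parser skips as a signal to re-run the prompt, not as a step to silently drop.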

⚙️ PROMPT #2: THE ARCHITECTURE MATCHMAKER

💡 What this prompt does: Now that we know what needs to happen, we assign the specific AI architecture.

We don't use GPT for everything. We use the 8-Model Framework (MoE, LRM, VLM, SLM, etc.) to pick the perfect tool for each step.

#CONTEXT:

You are a Lead AI Engineer who understands the "8 LLM Architectures" framework. You know that single-model agents are inefficient. You optimize for cost, speed, and accuracy by routing tasks to the correct architecture:

1. **GPT:** General conversation (Customer support).

2. **MoE (Mixture of Experts):** Fast, cheap intelligence (Mixtral/Grok).

3. **LRM (Large Reasoning Model):** Complex logic/Math (OpenAI o1, codenamed "Strawberry").

4. **VLM (Vision-Language Model):** Seeing images (Claude Vision/Gemini).

5. **SLM (Small Language Model):** Niche, fast tasks (Llama 3 8B).

6. **LAM (Large Action Model):** Executing tools/APIs.

7. **HLM (Hierarchical Language Model):** Planning/Management.

8. **LCM (Large Concept Model):** Abstract knowledge graphs.

#ROLE:

Adopt the role of an expert AI Model Router. Your goal is to take the "Cognitive Breakdown" from the previous step and assign the specific Architecture (and a real-world model example) to each step.

#RESPONSE GUIDELINES:

1. Review the Cognitive Type for each step.

2. **Match:**

* Visual -> VLM (e.g., Gemini Pro Vision)

* Reasoning -> LRM (e.g., OpenAI o1)

* Action -> LAM (e.g., Adept/Custom Tool Use)

* Creative/Simple -> MoE or another low-cost model (e.g., Mixtral, Haiku, Flash) for cost savings.

* Niche/Repetitive -> SLM (e.g., Llama 3 8B)

3. **Justify:** Briefly explain WHY this model fits (e.g., "Use LRM because math requires chain-of-thought").

#TASK CRITERIA:

- Prioritize **LRM** for any step involving money, law, or math.

- Prioritize **MoE** or **SLM** for high-volume text summarization to save money.

- Prioritize **VLM** for anything involving images, screenshots, or scanned documents.

#INFORMATION ABOUT ME:

- My Breakdown Table: [PASTE OUTPUT FROM PROMPT #1]

#RESPONSE FORMAT:

A list of "Architecture Assignments" in this format:

**Step X:** [Task Name]

**Architecture:** [Type]

**Recommended Model:** [Specific Model Name]

**Reasoning:** [Why]

Input needed:

The table from Prompt #1.

Output you'll get:

A strategic plan telling you to use Claude (VLM) for the invoice reading and o1 (LRM) for the math check, putting your strongest reasoning where accuracy counts most.
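The matching rules in Prompt #2 can also be written as a plain lookup table, which is handy if you want deterministic routing without a model in the loop. Treat this as a sketch under assumptions: the keyword list standing in for "money, law, or math", the fallback route, and the choice to leave Visual and Action steps on their own route are all mine, not part of the framework.

```python
import re

# Cognitive type -> (architecture, example model), following the match rules in Prompt #2.
ROUTES = {
    "VISUAL": ("VLM", "Gemini Pro Vision"),
    "REASONING": ("LRM", "OpenAI o1"),
    "ACTION": ("LAM", "custom tool use"),
    "CREATIVE": ("MoE", "Mixtral"),
    "NICHE": ("SLM", "Llama 3 8B"),
}

# Assumed fallback for unrecognized types: the general-purpose chat model.
DEFAULT_ROUTE = ("GPT", "general chat model")

# Assumed keyword list standing in for "money, law, or math".
HIGH_STAKES = {"money", "payment", "tax", "law", "legal", "math", "calculate"}


def assign(description: str, cognitive_type: str) -> tuple[str, str]:
    """Return (architecture, example model) for one step of the breakdown."""
    # "Creative/Conversational" and "Niche/Repetitive" are keyed by their first word.
    ctype = cognitive_type.split("/")[0].strip().upper()
    # Whole-word matching, so "flaw" does not trigger the "law" rule.
    words = set(re.findall(r"[a-z]+", description.lower()))
    # Escalate text steps that touch money, law, or math to the reasoning model.
    # Visual and Action steps keep their route because they need eyes or tools first.
    if ctype not in {"VISUAL", "ACTION"} and words & HIGH_STAKES:
        return ROUTES["REASONING"]
    return ROUTES.get(ctype, DEFAULT_ROUTE)


print(assign("Summarize the tax rules for the client", "Creative"))
print(assign("Read the invoice scan", "Visual"))
```

Keyword matching is crude. It is a cheap first pass, and you can still hand the edge cases to Prompt #2.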

⚙️ PROMPT #3: THE HLM MANAGER SCRIPT

💡 What this prompt does: This creates the HLM (Hierarchical Language Model) prompt—the "Boss" agent.

This prompt instructs a main model (like GPT-4 or Claude 3.5 Sonnet) on how to manage the other agents. It gives the "Boss" the instructions to route tasks to the "Workers."

#CONTEXT:

You are a Prompt Engineering Expert specializing in HLM (Hierarchical Language Model) systems. You need to create a "System Prompt" for a "Manager Agent." This Manager Agent will not do the work itself; it will act as a Router/Planner that delegates tasks to specialized sub-agents based on the architecture plan we created.

#ROLE:

You are the "Master Orchestrator." Your job is to write the system prompt for the Manager Agent.

#RESPONSE GUIDELINES:

1. Write a system prompt that tells the AI: "You are the Manager. Your goal is to oversee [Project Name]."

2. Define the "Sub-Agents" available based on the user's plan (e.g., "You have a Vision Agent and a Math Agent").

3. Define the "Routing Logic": Tell the Manager when to call which agent.

4. Include "Output Aggregation": Instruct the Manager how to combine the results into a final answer.

#TASK CRITERIA:

- The generated prompt must be strict. The Manager should NOT try to do visual tasks itself; it MUST delegate to the Vision Agent.

- Use XML tags for structure (e.g., <sub_agents>).

#INFORMATION ABOUT ME:

- My Architecture Plan: [PASTE OUTPUT FROM PROMPT #2]

#RESPONSE FORMAT:

A code block containing the full "System Prompt" for the Manager Agent.

Input needed:

The Model Assignments from Prompt #2.

Output you'll get:

A master prompt you can paste into your main chat window (or automation builder like Make/Zapier) that makes the AI act as a "Project Manager," coordinating the specialized tasks.
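To give you a feel for the output, here is a minimal sketch that assembles a Manager prompt from a list of sub-agents. Only the `<sub_agents>` tag comes from the prompt above; the other tag names, the `build_manager_prompt` function, and the example agents are hypothetical. What Prompt #3 actually generates will differ.

```python
from xml.sax.saxutils import escape, quoteattr


def build_manager_prompt(project: str, agents: list[tuple[str, str, str]]) -> str:
    """Build a Manager system prompt. Each agent is (name, architecture, when_to_call)."""
    # quoteattr and escape keep the tags well-formed if a value contains &, <, or quotes.
    agent_lines = "\n".join(
        f"  <agent name={quoteattr(name)} architecture={quoteattr(arch)}>{escape(when)}</agent>"
        for name, arch, when in agents
    )
    return (
        f"You are the Manager. Your goal is to oversee {project}.\n"
        "You never do a sub-agent's work yourself; you MUST delegate it.\n"
        "<sub_agents>\n"
        f"{agent_lines}\n"
        "</sub_agents>\n"
        "<routing_logic>\n"
        "For each step, name the one agent to call and the exact input to send it.\n"
        "</routing_logic>\n"
        "<output_aggregation>\n"
        "Combine the agents' results into one final answer and note which agent produced each part.\n"
        "</output_aggregation>"
    )


prompt = build_manager_prompt(
    "Invoice Audit",
    [
        ("Vision Agent", "VLM", "Call for any image or scanned document."),
        ("Math Agent", "LRM", "Call for totals & tax checks."),
    ],
)
print(prompt)
```

Building the prompt from data means adding a new specialist later is a one-line change to the agent list.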

📋 SUMMARY 📋

Single-model agents are dead. They can't do math and write poetry at the same time.

The 8-Model Framework gives you a toolkit: VLM for eyes, LRM for brains, LAM for hands.

The HLM approach (Prompt #3) creates a "Manager" to oversee the specialists.

You now have a production-grade architecture map, not just a chat window.

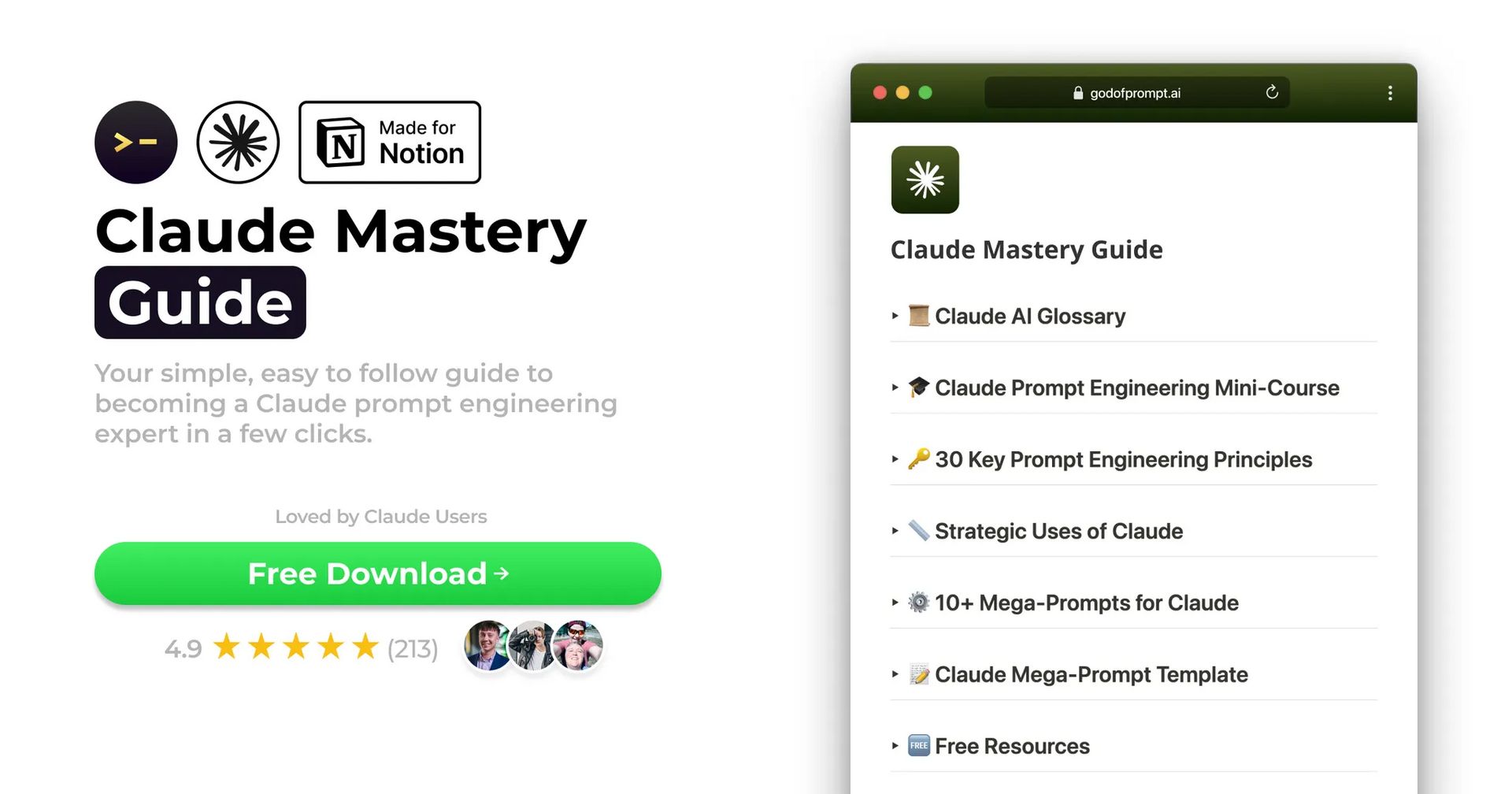

📦 WRAP UP 📦

What you learned today:

The 8 Architectures - From MoE (Speed) to LRM (Thinking).

How to Deconstruct - Breaking workflows into "Cognitive Steps."

The HLM Strategy - Using a "Manager AI" to route tasks to "Specialist AIs."

This workflow transforms "hoping ChatGPT gets it right" into "engineering a system built to get it right."

Fewer hallucinations.

You now have a multi-brain system.

And as always, thanks for being part of my lovely community,

Keep building systems,

🔑 Alex from God of Prompt

P.S. Which of the 8 architectures do you want a deep dive on next? The "Action Models" (LAMs) are getting crazy...